Key Topics to Cover in Corporate Apache Kafka Development

1) Introduction to Apache Kafka

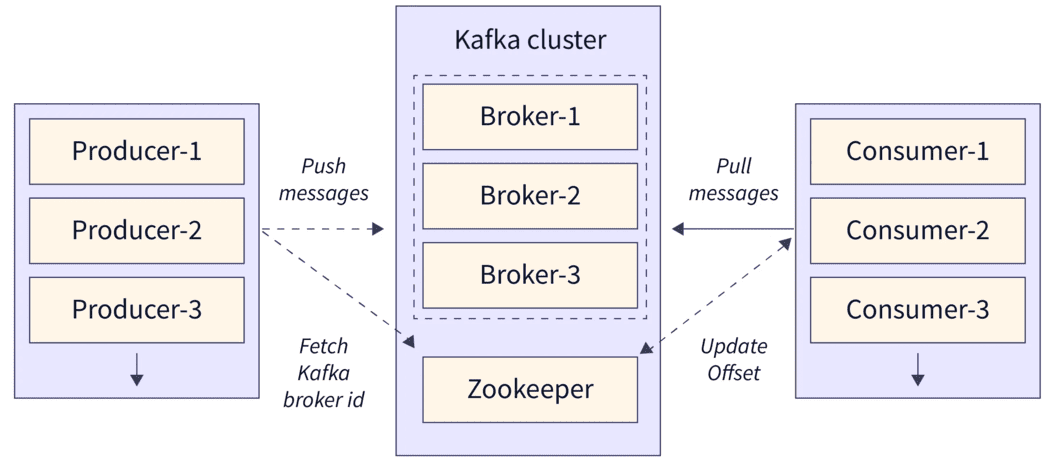

- Overview of Kafka Architecture

- Core Concepts: Topics, Partitions, Producers, Consumers, Brokers

- Installation and Configuration

- Understanding Kafka's Role in Event Streaming
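The following Python sketch illustrates how topics, partitions, and producers fit together by showing how a keyed record can be mapped to a partition. It assumes the Java client's default behavior for records that carry a key: a murmur2 hash of the serialized key bytes, with the sign bit cleared, taken modulo the partition count. Records without a key, custom partitioners, and other client libraries are not modeled. The function names `murmur2` and `partition_for_key` are hypothetical helpers, not a client API.

```python
# Sketch of key-based partition selection, modeled on the Java client's
# default behavior for keyed records. Function names are hypothetical.

MASK32 = 0xFFFFFFFF  # emulate Java's 32-bit int overflow


def murmur2(data: bytes) -> int:
    """32-bit murmur2 hash following Kafka's Utils.murmur2 (Java client).

    Returns the hash as an unsigned 32-bit value.
    """
    length = len(data)
    m = 0x5BD1E995
    r = 24
    h = (0x9747B28C ^ length) & MASK32  # seed mixed with the input length

    # Process the input four bytes at a time (little-endian words).
    for i in range(length // 4):
        i4 = i * 4
        k = data[i4] | (data[i4 + 1] << 8) | (data[i4 + 2] << 16) | (data[i4 + 3] << 24)
        k = (k * m) & MASK32
        k ^= k >> r
        k = (k * m) & MASK32
        h = (h * m) & MASK32
        h ^= k

    # Mix in the 1-3 trailing bytes, if any.
    tail = length % 4
    base = length - tail
    if tail >= 3:
        h ^= data[base + 2] << 16
    if tail >= 2:
        h ^= data[base + 1] << 8
    if tail >= 1:
        h ^= data[base]
        h = (h * m) & MASK32

    # Final avalanche step.
    h ^= h >> 13
    h = (h * m) & MASK32
    h ^= h >> 15
    return h


def partition_for_key(key: bytes, num_partitions: int) -> int:
    """Map a serialized key to a partition index in [0, num_partitions)."""
    if num_partitions <= 0:
        raise ValueError("num_partitions must be positive")
    # Clear the sign bit (like Utils.toPositive), then take the remainder.
    return (murmur2(key) & 0x7FFFFFFF) % num_partitions


if __name__ == "__main__":
    for key in (b"order-41", b"order-42", b"order-42"):
        print(key, partition_for_key(key, 6))
```

Because the partition depends only on the key bytes and the partition count, every record with the same key lands on the same partition, which is what gives per-key ordering. It also means that changing a topic's partition count remaps existing keys.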

2) Kafka Producer and Consumer

- Writing and Configuring Kafka Producers

- Consuming Data with Kafka Consumers

- Handling Message Serialization and Deserialization

- Configuring Kafka Consumer Groups and Partition Assignments
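The Python sketch below makes consumer-group partition assignment concrete by reproducing the arithmetic of Kafka's range assignment strategy (`RangeAssignor` in the Java client) for a single topic. It simplifies under stated assumptions: consumers are ordered by a plain member ID string, static membership (`group.instance.id`) and multi-topic subscriptions are ignored, and the function name `range_assign` is hypothetical, not a client API.

```python
from __future__ import annotations


def range_assign(num_partitions: int, consumer_ids: list[str]) -> dict[str, list[int]]:
    """Hypothetical helper: assign partitions 0..num_partitions-1 of one topic
    using range-style arithmetic. Each consumer gets a contiguous block, and
    the first (num_partitions % consumers) consumers get one extra partition."""
    if not consumer_ids:
        raise ValueError("at least one consumer is required")
    consumers = sorted(consumer_ids)  # same order on every group member
    per_consumer, extra = divmod(num_partitions, len(consumers))
    assignment: dict[str, list[int]] = {}
    for i, cid in enumerate(consumers):
        start = per_consumer * i + min(i, extra)
        length = per_consumer + (1 if i < extra else 0)
        assignment[cid] = list(range(start, start + length))
    return assignment


if __name__ == "__main__":
    print(range_assign(7, ["c3", "c1", "c2"]))
    print(range_assign(2, ["a", "b", "c"]))
```

With 7 partitions and three consumers, `c1` receives partitions 0, 1, and 2, while `c2` and `c3` receive two each. With more consumers than partitions, the surplus consumers get nothing and sit idle, which is why adding consumers beyond the partition count does not increase parallelism.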

3) Kafka Streams API

- Introduction to Kafka Streams for Stream Processing

- Building Stream Processing Applications with the Kafka Streams DSL

- Stateful Stream Processing with Kafka Streams

- Testing and Debugging Kafka Streams Applications

4) Kafka Connect

- Overview of Kafka Connect for Data Integration

- Using Kafka Connect Connectors for Data Import/Export

- Customizing and Extending Kafka Connect

- Monitoring and Managing Kafka Connect Clusters

5) Kafka Administration and Operations

- Cluster Deployment and Configuration Best Practices

- Monitoring Kafka Clusters with Metrics and Logs

- Scaling Kafka Clusters for Performance and Fault Tolerance

- Backup and Recovery Strategies

6) Kafka Security

- Authentication and Authorization in Kafka

- Securing Kafka Clusters with SSL/TLS Encryption

- Using ACLs (Access Control Lists) for Authorization

- Securing Kafka Connect and Kafka Streams Applications

Mastering corporate Apache Kafka development equips individuals to design, implement, and manage real-time streaming data pipelines effectively. This curriculum covers core Kafka concepts, producer and consumer APIs, Kafka Streams for stream processing, Kafka Connect for data integration, administration and operations, and security best practices. By understanding these topics, you can contribute effectively to Kafka projects and enhance your career prospects in the real-time data processing field.